Forward-looking: As AI workloads reshape computing, AMD is exploring a dedicated neural processing unit to complement or replace GPUs in AI PCs. This move reflects growing industry momentum toward specialized accelerators that promise faster performance and greater energy efficiency – key factors as PC makers race to deliver smarter, leaner machines.

AMD is exploring whether PCs could benefit from a new kind of accelerator: a discrete neural processing unit. The company has long relied on GPUs for demanding workloads, but the rise of AI-specific hardware opens the door to something more efficient and specialized.

Rahul Tikoo, head of AMD’s client CPU business, told CRN that the chipmaker is in early talks with customers about what such a chip might look like and where it could fit.

“We’re talking to customers about use cases and potential opportunities for a dedicated accelerator chip that is not a GPU but could be a neural processing unit,” Tikoo said during a briefing before AMD’s Advancing AI event last month.

The idea arrives as PC makers like Lenovo, Dell Technologies, and HP seek ways to offload AI processing from traditional CPUs and GPUs. Dell has already taken that step with its new Pro Max Plus laptop, which features a Qualcomm AI 100 inference card – touted as the first enterprise-grade discrete NPU for PCs.

Tikoo declined to reveal when AMD might launch such a chip, stressing that future plans remain under NDA. However, he suggested the company has the pieces in place to move quickly if it decides to proceed, making the leap to a discrete NPU plausible.

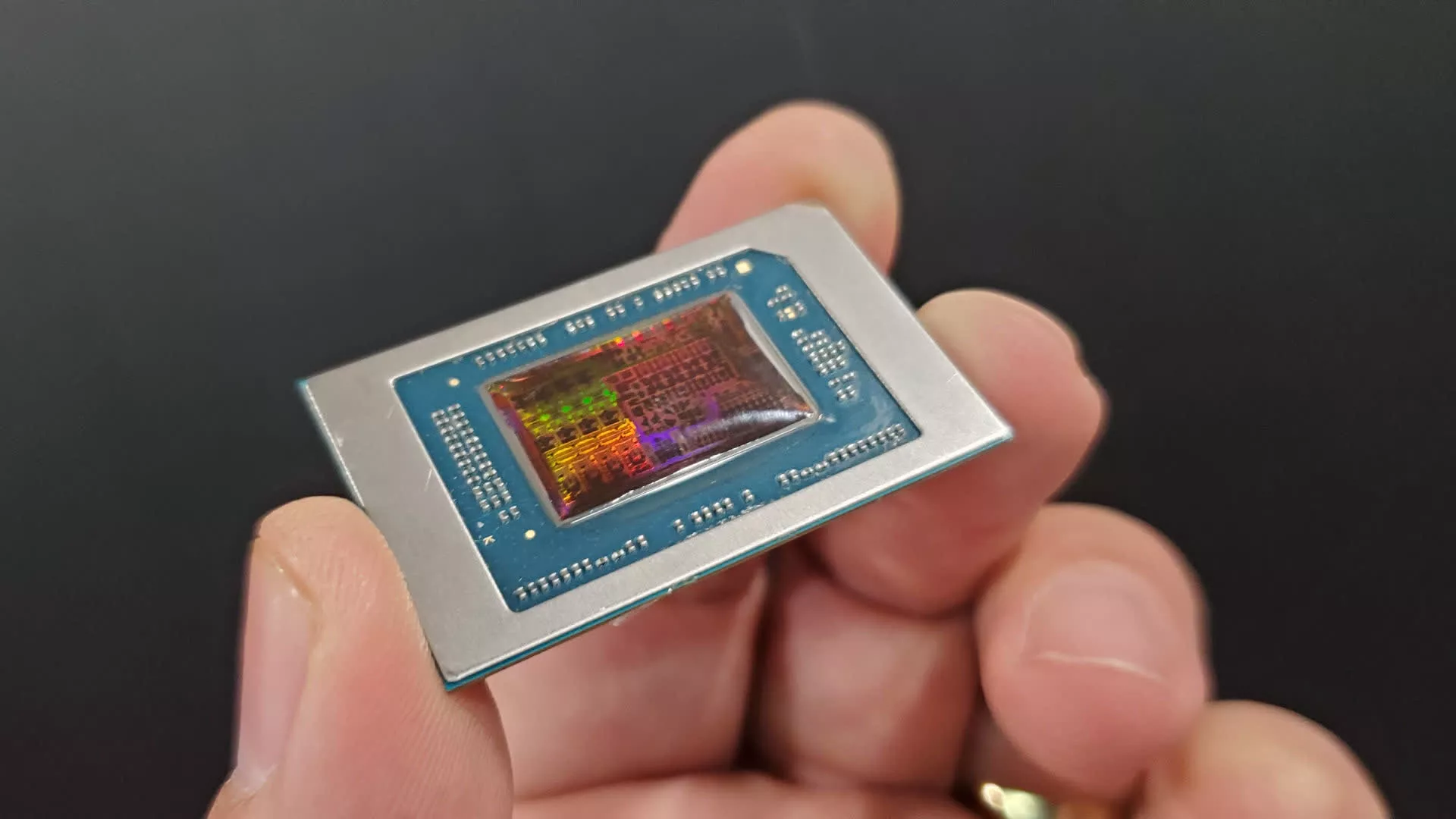

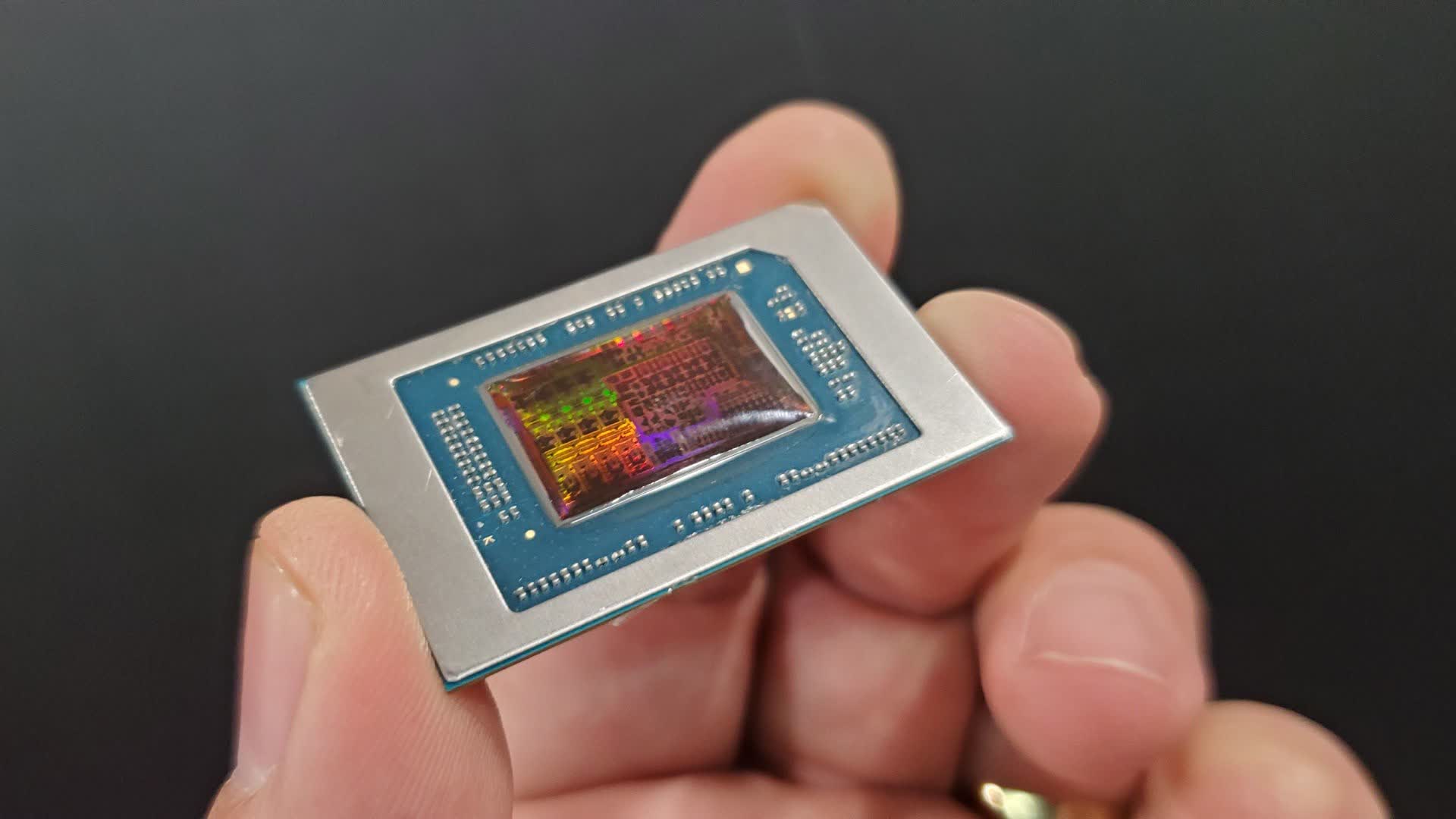

AMD’s efforts to embed AI capabilities into Ryzen processors could provide the foundation. The company has used AI engine technology from its Xilinx acquisition as the basis for NPU blocks in its latest chips – a move that could scale into stand-alone products.

Christopher Cyr, CTO of Sterling Computers, said the technology roadmap is already clear.

“If this particular NPU tile creates 50 TOPS [trillion operations per second], tack on two of them, make it 100 TOPS,” Cyr said.

He emphasized that any discrete NPU from AMD must deliver meaningful performance gains without consuming the kind of power or generating the heat typical of a stand-alone GPU. Efficiency is critical for PC makers striving to maintain thin designs and long battery life while adding AI capability. Without those energy savings, a discrete NPU risks becoming just another bulky, heat-producing component rather than a genuine alternative to today’s GPU-driven solutions.


Cyr cited AMD’s Gaia open-source project, designed to run large language models locally on Ryzen-based Windows PCs, as evidence that the company is laying the groundwork for a broader AI push.

“They’re making really good inroads towards leveraging that whole ecosystem,” he noted.

While GPUs have been the default accelerator for years – and Nvidia would like to keep it that way – NPUs are reshaping the landscape. Intel, AMD, and Qualcomm have integrated NPUs into their latest processors. Still, there is growing momentum for discrete versions that deliver higher performance without the heat and power draw of GPUs.

Some of the first attempts involved Intel, whose Movidius VPU shipped in a 2023 Surface Laptop before the company’s Core Ultra chips had onboard NPUs. Dell’s latest workstation takes things further with a Qualcomm card pushing 450 TOPS in a 75-watt envelope. Startups like EnCharge AI are entering the fray too, promising NPU add-ons with GPU-level compute capacity at a fraction of the cost and power consumption.
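For a rough sense of scale, the figures quoted above can be worked through in a few lines of Python. This is a back-of-the-envelope sketch, not vendor data: the function names are made up for illustration, and the tile scaling assumes perfectly linear gains, which real designs may not achieve once memory bandwidth, interconnect, and thermals come into play.

```python
def tops_per_watt(tops: float, watts: float) -> float:
    """Throughput per watt for a quoted TOPS figure and power envelope."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return tops / watts


def scaled_tops(tile_tops: float, tiles: int) -> float:
    """Idealized linear scaling (assumption): total TOPS = per-tile TOPS x tile count."""
    if tiles < 0:
        raise ValueError("tiles must be non-negative")
    return tile_tops * tiles


# Figures quoted in the article
card_efficiency = tops_per_watt(450, 75)  # Qualcomm card: 450 TOPS in 75 W
two_tile_total = scaled_tops(50, 2)       # Cyr's hypothetical: two 50-TOPS tiles

print(f"Quoted card efficiency: {card_efficiency:.1f} TOPS per watt")
print(f"Two-tile total (idealized): {two_tile_total:.0f} TOPS")
```

On those quoted numbers, the Qualcomm card works out to 6 TOPS per watt. The article gives no power figure for AMD's NPU tiles, so no comparable efficiency number can be derived for them.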

A discrete NPU from AMD would broaden the company’s product lineup beyond CPUs, GPUs, and integrated accelerators. It would offer OEMs a new option for adding AI capabilities to PCs, potentially providing a leaner and more energy-efficient alternative to today’s GPU-heavy setups.