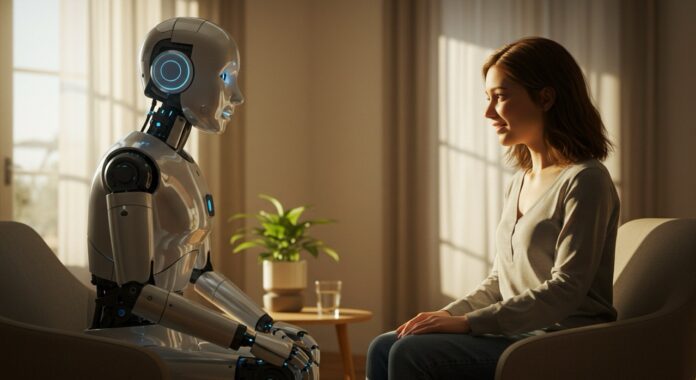

The state of Illinois has taken an unprecedented step by prohibiting the use of artificial intelligence (AI) as a therapist, regulating how these technologies can be used in mental health care.

The law, called the Wellness and Oversight for Psychological Resources (WOPR) Act, was signed by Governor J.B. Pritzker and prevents AI-based applications and services from making clinical decisions, such as diagnoses or psychological treatment decisions. Violators can be fined up to US$10,000.

Illinois is the first state to enact legislation prohibiting the use of AI tools such as ChatGPT to provide therapy.

The initiative comes amid the growing use of AI by people seeking emotional support or even companionship, a trend that worries mental health professionals. Psychologists and social workers support regulation to protect the public from potential harm caused by algorithms that pose as specialists without proper qualifications. The new law ensures that only licensed professionals can perform these clinical functions.

Although AI is barred from making therapeutic decisions, the law still allows therapists to use it for administrative tasks such as writing notes and scheduling. Wellness applications that offer meditation or other non-clinical support, such as Calm, remain permitted.

However, services that present themselves as substitutes for therapy, such as Ash Therapy, cannot operate in Illinois and have already blocked access for users in the state.

- Prohibits AI from making diagnoses or therapeutic decisions;

- Fine of up to $10,000 for offenders;

- AI allowed only for administrative tasks in clinics;

- Wellness applications without a clinical function are not affected;

- AI therapy services, such as Ash, cannot operate in Illinois.

The controversy is further fueled by reports of users who developed delusions after prolonged interactions with chatbots. OpenAI itself has announced measures to reduce potential harms, such as reminders encouraging users to take breaks during long ChatGPT sessions. The debate highlights how difficult it is to integrate AI into sensitive areas such as mental health while balancing innovation against user safety.